What is Hadoop?

As we discussed in the last blog, Big Data is not just Hadoop. Similarly, Hadoop is not a single monolithic thing but an ecosystem: an amalgamation of components and technologies such as HDFS (Hadoop Distributed File System), MapReduce, Pig, Hive, HBase, Flume and so on.

But what makes Hadoop so special? Basically, Hadoop is a way of storing enormous data sets across distributed clusters of servers and then running "distributed" analysis on each cluster. It is designed to be robust, i.e. your Big Data applications will continue to run even when individual servers or clusters fail. It is also designed to be efficient, because it runs the analysis on the nodes where the data is stored, so your applications don't have to shuttle huge volumes of data across your network.

Hadoop Ecosystem

We can combine Hadoop components in many ways, but above all HDFS and MapReduce form the technology stack that supports applications with large data sets in BI/DW and analytics. Other Hadoop projects build on that stack: Impala, for example, is a SQL engine that supports BI/DW by providing low-latency access to data in HDFS and Hive.

The image below describes the Hadoop ecosystem.

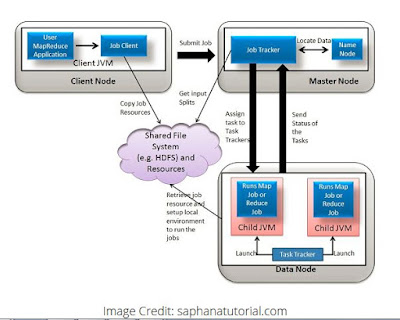

Today's view of the Hadoop architecture gives prominence to Hadoop Common, YARN, HDFS and MapReduce.

1) Hadoop Common refers to the collection of common utilities, libraries, OS-level abstractions, and the necessary Java files and scripts that support the other Hadoop modules. It is an essential module of the Apache Hadoop framework. Hadoop Common is also known as Hadoop Core.

2) Hadoop YARN is a cluster management framework that manages resources and schedules tasks. It is a great enabler for dynamic resource utilization on the Hadoop framework, as users can run various Hadoop applications without having to worry about increasing workloads. The Apache Software Foundation, the license holder for Hadoop, describes Hadoop YARN as 'next-generation MapReduce' or 'MapReduce 2.0'.

3) HDFS is a distributed file system that runs on standard or low-end (commodity) hardware. Developed as part of Apache Hadoop, HDFS works like a standard distributed file system but provides high data throughput for processing frameworks such as MapReduce, high fault tolerance and native support for large data sets.

HDFS comprises three important components: the NameNode, the DataNodes and the Secondary NameNode. HDFS operates on a master-slave architecture in which the NameNode acts as the master node, keeping track of the storage cluster, and the DataNodes act as slave nodes running on the various machines within a Hadoop cluster.

It provides data reliability by replicating each block of data as three copies: two on one rack and one on another. If a copy is lost in a failure, it is re-created from the surviving copies.

The default replication factor is 3:

• 1st replica on the local rack

• 2nd replica on the local rack, but on a different machine

• 3rd replica on a different rack
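The placement rule in the list above can be sketched in a few lines of Python. This is only an illustration of the rule as this post describes it, not HDFS's actual placement code; the function `place_replicas`, the `topology` dictionary and the node and rack names are all made up for the example.

```python
import random

def place_replicas(writer_node, topology, rng=random):
    """Pick three nodes for one block, following the list above.

    topology maps a rack name to the node names on that rack (hypothetical
    names). Assumes at least two racks and at least two nodes on the
    writer's rack.
    """
    local_rack = next(rack for rack, nodes in topology.items()
                      if writer_node in nodes)
    # 1st replica: a node on the local rack (here, the writer itself).
    first = writer_node
    # 2nd replica: same rack, but a different machine.
    second = rng.choice([n for n in topology[local_rack] if n != first])
    # 3rd replica: any node on a different rack.
    remote_rack = rng.choice([r for r in topology if r != local_rack])
    third = rng.choice(topology[remote_rack])
    return [first, second, third]

topology = {
    "rack1": ["node1", "node2", "node3"],
    "rack2": ["node4", "node5"],
}
replicas = place_replicas("node1", topology)
print(replicas)
```

With this layout, losing a single machine still leaves two copies, and losing a whole rack still leaves at least one.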

The HDFS architecture consists of clusters, each of which is accessed through a single NameNode, a software tool installed on a separate machine to monitor and manage that cluster's file system and user access mechanism. Each of the other machines runs one instance of DataNode to manage cluster storage.
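To make the division of labour concrete, here is a toy, in-memory model of a NameNode and a few DataNodes. It is a sketch under simplifying assumptions (no networking, no failures, every block copied to every node), and the class and function names are invented for illustration; they are not the Hadoop API.

```python
class NameNode:
    """Toy master: holds only metadata, never the file data itself."""

    def __init__(self):
        self.file_blocks = {}      # file path -> ordered list of block ids
        self.block_locations = {}  # block id -> set of DataNode names

    def add_block(self, path, block_id, datanode_names):
        self.file_blocks.setdefault(path, []).append(block_id)
        self.block_locations[block_id] = set(datanode_names)

    def locate(self, path):
        """Tell a client which DataNodes hold each block of a file."""
        return [(block_id, sorted(self.block_locations[block_id]))
                for block_id in self.file_blocks[path]]


class DataNode:
    """Toy worker: stores block contents keyed by block id."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}

    def store(self, block_id, data):
        self.blocks[block_id] = data

    def read(self, block_id):
        return self.blocks[block_id]


datanodes = {name: DataNode(name) for name in ("dn1", "dn2", "dn3")}
namenode = NameNode()

def write_file(path, chunks, replica_names=("dn1", "dn2", "dn3")):
    # Each chunk becomes one block, copied to every listed DataNode.
    for index, chunk in enumerate(chunks):
        block_id = f"{path}#blk{index}"
        for name in replica_names:
            datanodes[name].store(block_id, chunk)
        namenode.add_block(path, block_id, replica_names)

def read_file(path):
    # Ask the NameNode where the blocks are, then fetch them from DataNodes.
    parts = []
    for block_id, holders in namenode.locate(path):
        parts.append(datanodes[holders[0]].read(block_id))
    return b"".join(parts)

write_file("/logs/app.log", [b"hello ", b"world"])
print(read_file("/logs/app.log"))
```

The point to notice is that the NameNode only answers "where is it?", while the bytes themselves always come from a DataNode.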

Because HDFS is written in Java, it has native support for Java application programming interfaces (API) for application integration and accessibility. It also may be accessed through standard Web browsers.

4) MapReduce is a programming model introduced by Google. It breaks a big data processing job down into smaller tasks, analyzes large data sets in parallel, and then reduces the intermediate output to produce the final results. In the Hadoop ecosystem, Hadoop MapReduce is a framework that runs on the YARN architecture. YARN-based Hadoop supports parallel processing of huge data sets, and MapReduce provides the framework for easily writing applications that run on thousands of nodes while handling faults and failures.

It is highly scalable and has implementations in several programming languages, such as Java, C# and C++.

The MapReduce framework has two parts:

- A function called "Map", which runs on different nodes of the distributed cluster so that each node processes its share of the input and emits intermediate key-value pairs

- A function called "Reduce", which combines the intermediate results from across the cluster into the final output
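The classic way to see the two functions working together is a word count. The sketch below runs everything in one Python process, so it only imitates what Hadoop distributes across nodes; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) and the sample input are chosen just for this example.

```python
from collections import defaultdict

def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one line of input."""
    for word in line.lower().split():
        yield word, 1

def shuffle(pairs):
    """Group all values by key, as the framework does between Map and Reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped

def reduce_phase(word, counts):
    """Reduce: collapse all the counts for one word into a single total."""
    return word, sum(counts)

def word_count(splits):
    intermediate = []
    for split in splits:  # on a real cluster, each split goes to a different node
        for line in split:
            intermediate.extend(map_phase(line))
    grouped = shuffle(intermediate)
    return dict(reduce_phase(word, counts) for word, counts in grouped.items())

splits = [["big data is big"], ["hadoop stores big data"]]
result = word_count(splits)
print(result)
```

Because each split is mapped independently, adding more nodes lets more splits be processed at the same time, which is where the scalability comes from.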

A task can be transferred from one node to another. If the master node notices that a node has been silent for longer than expected, it reassigns the frozen or delayed task to another node.
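A minimal sketch of that reassignment logic, assuming the master records the time it last heard from each node. The function name, the round-robin choice of a new node and the 30-second timeout are illustrative assumptions, not Hadoop's real scheduler or its settings.

```python
def reassign_stalled_tasks(assignments, last_heartbeat, now, timeout):
    """Return a new task -> node mapping with tasks moved off silent nodes.

    assignments:    task id -> node currently running it
    last_heartbeat: node -> time (in seconds) the master last heard from it
    """
    silent = {node for node, seen in last_heartbeat.items()
              if now - seen > timeout}
    healthy = [node for node in last_heartbeat if node not in silent]
    if not healthy:
        raise RuntimeError("no healthy node left to take over the tasks")

    updated = dict(assignments)
    moved = 0
    for task, node in sorted(assignments.items()):
        if node in silent:
            # Hand the stalled task to the next healthy node, round-robin.
            updated[task] = healthy[moved % len(healthy)]
            moved += 1
    return updated

assignments = {"map-0": "node1", "map-1": "node2", "map-2": "node3"}
last_heartbeat = {"node1": 100.0, "node2": 40.0, "node3": 98.0}

# node2 was last heard from 65 seconds ago, past the assumed 30-second timeout.
new_assignments = reassign_stalled_tasks(assignments, last_heartbeat,
                                         now=105.0, timeout=30.0)
print(new_assignments)
```

Here only `map-1` moves, because `node2` is the only node that has gone quiet; the other tasks stay where they are.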

As discussed above, several other Hadoop components form an integral part of the Hadoop ecosystem, each intended to enhance the power of Apache Hadoop in some way: providing better integration with databases, making Hadoop faster, or adding new features and functionality. To learn more about some of the prominent Hadoop components used extensively by enterprises, please read my next blog.

To continue further and understand how Hadoop works, please read my blog
